As global vehicle manufacturers work to develop and perfect autonomous driving capabilities, they need management systems that protect the most important assets behind those capabilities: the data and the AI models that enable them.

Enter ISO/IEC 42001: the first international standard for managing AI systems. In this article, I will show where artificial intelligence plays a role in autonomous driving development and how vehicle manufacturers can leverage ISO 42001 controls to safely develop, deploy, and continuously improve their autonomous driving efforts.

Artificial Intelligence in Autonomous Driving: Where It Plays a Role

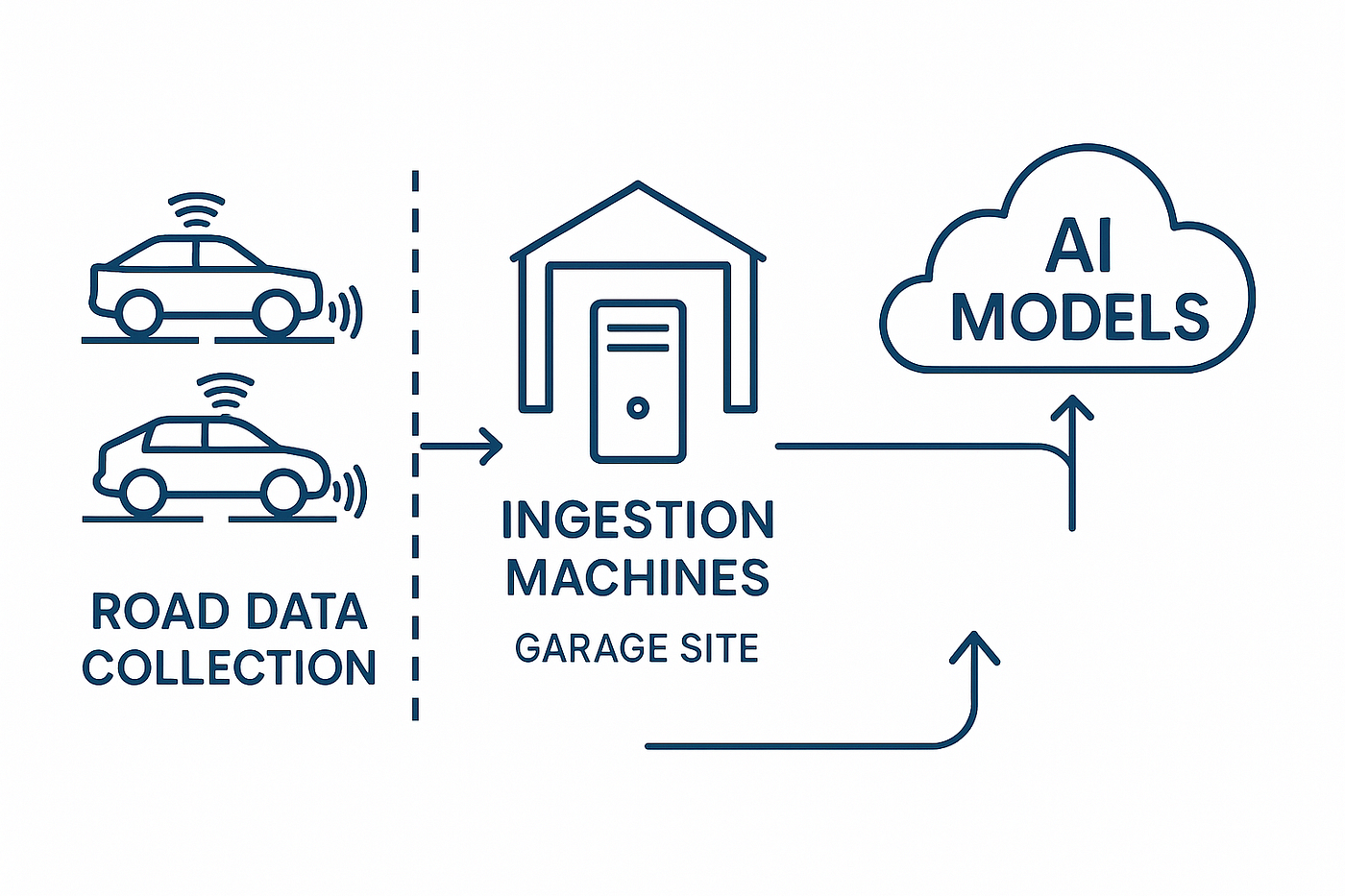

As many are aware, AI is developed with large sets of training data. The popular AI tool ChatGPT generates human language, so it is trained on massive datasets of text from websites, articles, books, and similar sources. An autonomous driving model has a different purpose, to perceive and navigate the real world safely, so it is trained on datasets of video, GPS, and annotated driving scenarios recorded from radars and cameras placed on vehicles.

Unique ISO 42001 Controls for Autonomous Driving Environments

Most information security and risk management professionals will be familiar with ISO27001 (Information Security Management) control requirements for the proper development of policies, defined roles/responsibilities, and other required governance. In this section, I want to focus on the controls unique to ISO42001 and what the workflows would look like in organizations developing autonomous driving solutions.

Control - A.4 Resources for AI Systems

Resources include the data collected, tooling (software), systems, and computing resources.

While this appears to be obvious on the surface, not properly tracking resources can compound into serious issues that threaten the sustainability of the AI Management System.

Documenting systems and computing resources is rather straightforward. It comes down to standardizing all desktops, servers, human-machine interfaces (HMIs), and cloud infrastructure that will be used to ingest the data obtained from the vehicles.

Tooling requires a more proactive change in processes. Most organizations will have a vendor security or “third-party” procurement process where engineers request the specific software they need to do their job. Legal and privacy teams review how the vendor handles the data, the security team reviews the feasibility of integrating the tool into the environment, and then the request is either approved or denied.

Where most processes fall short is in defining a person who is responsible for the tooling. What is the objective of the tool relative to the AI objectives? Which teams should have access to the tool? Who is responsible for reviewing the future relevance and decommissioning of the tool? What data does the tool access? These questions are often not addressed, let alone documented, because they seem insignificant to those initially requesting the tool. However, after a year or two of multiple teams requesting tooling for different reasons, the organization ends up with a collection of AI software it does not have full visibility into. Imagine dozens of AI tools, such as deployment platforms, simulation software, and data labeling tools, where no one knows which tool is used by whom and for what purpose. The result is substantial potential for wasted money and for tooling or data that is not properly secured.

Solution: During the third-party/vendor acquisition process, organizations should integrate a step that documents the purpose of the tool, the owner of the tool, the data accessed by the tool, and any other information relevant to the organization’s AI objectives. This document should be available to top management, engineers, privacy teams, and other relevant interested parties.
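The documentation described above could be captured as one structured record per tool. The following is a minimal sketch, not something ISO 42001 prescribes: the `ToolRecord` class, its field names, the `find_gaps` helper, and the example tool are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class ToolRecord:
    """One entry in a hypothetical AI tooling register."""
    name: str
    purpose: str                      # how the tool supports the AI objectives
    owner: str                        # person accountable for the tool
    teams_with_access: list[str] = field(default_factory=list)
    data_accessed: list[str] = field(default_factory=list)
    next_review: Optional[date] = None  # relevance/decommissioning review date


def find_gaps(record: ToolRecord, today: date) -> list[str]:
    """Return the governance questions this record leaves unanswered."""
    gaps = []
    if not record.owner.strip():
        gaps.append("no owner assigned")
    if not record.purpose.strip():
        gaps.append("purpose not documented")
    if not record.data_accessed:
        gaps.append("data accessed not documented")
    if record.next_review is None:
        gaps.append("no review date set")
    elif record.next_review < today:
        gaps.append("review overdue")
    return gaps


labeling_tool = ToolRecord(
    name="example-labeling-tool",     # hypothetical tool name
    purpose="Annotate camera frames for perception training",
    owner="",                         # nobody was assigned at procurement
    teams_with_access=["perception"],
    data_accessed=["camera video"],
    next_review=date(2024, 1, 15),
)
print(find_gaps(labeling_tool, today=date(2025, 1, 1)))
# → ['no owner assigned', 'review overdue']
```

Running a check like this across the whole register gives top management and privacy teams a quick list of tools that nobody owns or that are overdue for a relevance review.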

Control - A.5 Assessing Impacts of AI Systems

The ISO42001 Standard references ISO42005, AI System Impact Assessment, for guidance on how to perform a structured impact assessment specifically for AI systems.

It should be understood that this established process overlaps with the simple documentation of resources. For example, Section 5.2 of ISO42005 states, “The organization should document the process for completing AI system impact assessments.” Section 6.3 of 42005 states, “The AI system impact assessment should include a basic description of the AI system, describing what the AI system does and, at a high level, how it works.” I will be leaving out these portions of the Standard and focusing specifically on where it advises on the impact assessment guidelines.

AI System Impacts contain 3 main components according to ISO42005:

- Data Risk Assessments

- Model Risk Assessments

- Impact Mapping of Benefits and Harms to Persons or Groups

Data Risk Assessments

Section 6.4.2 states:

“When documenting datasets used in AI systems in an AI system impact assessment, the organization should consider its data quality, data governance, security, and privacy processes in relation to such datasets.”

As stated earlier in the article, autonomous vehicle development collects its datasets by manually driving vehicles on roads and recording video, GPS, and annotated driving scenarios from radars and cameras placed on the vehicles. So where does the risk enter?

- The hardware itself. Since the vehicles ingest data directly from the LiDAR sensors, impact assessments should review the potential for that data to be corrupted at the ingestion point. Many LiDAR sensors come with Ethernet ports, which means network connectivity, which in turn means the potential for malicious modification of the dataset. Secure handling and configuration of LiDAR sensors should be enforced from the start to avoid the familiar adage “garbage in, garbage out”: poor data ingested into the training pipeline will result in poor output from the trained model. That is a safety concern when we’re working with AI models meant to drive vehicles autonomously.
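One common way to detect modification after the ingestion point is to record a cryptographic digest of every captured file and re-check it before training. The sketch below uses only Python's standard library and is an illustration, not a control mandated by ISO 42005. The function names and the idea of a per-capture manifest are assumptions, and it presumes the manifest itself is stored somewhere the sensor network cannot write to.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large sensor recordings need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(capture_dir: Path) -> dict[str, str]:
    """Record a digest for every file present at the ingestion point."""
    return {
        str(p.relative_to(capture_dir)): sha256_of(p)
        for p in sorted(capture_dir.rglob("*"))
        if p.is_file()
    }


def find_tampered(capture_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return manifest entries that are missing or whose contents changed."""
    tampered = []
    for rel_path, expected in manifest.items():
        target = capture_dir / rel_path
        if not target.is_file():
            tampered.append(rel_path)
        elif not hmac.compare_digest(sha256_of(target), expected):
            tampered.append(rel_path)
    return tampered
```

A check like this only catches changes made after the manifest was built, and it does not flag files added later. Data corrupted inside the sensor before capture still needs the secure configuration described above.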

Model Risk Assessments

Section 6.5.1 states:

“The organization should document information around such algorithms and models, including their development, to ensure that reasonably foreseeable impacts related to them can be considered and assessed.”

Large language models such as ChatGPT might be the most familiar type of AI model. In autonomous driving development, however, the AI models are steering a vehicle. This creates a distinctly different landscape of risks that apply to the models.

Failure in edge cases: What happens when the AI model encounters a fallen tree on the road, or a road with temporary construction impediments such as cones or handwritten signs? Over-reliance on simulated data or data collected on a “closed course” can increase the risk of the autonomous driving model making incorrect decisions when faced with these “edge cases”.

Stale autonomous driving model: What happens when driving laws are updated, or new road patterns are introduced? These are impacts that should be documented and accounted for when making decisions, particularly decisions about where to deploy the model.
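The staleness question can be made concrete by comparing a model's training data cutoff against a log of law and road changes per deployment region. The sketch below is an assumption-laden illustration: the `RegionChange` record, the `stale_regions` function, and the region names and dates are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class RegionChange:
    """A law or road-layout change logged for a deployment region."""
    region: str
    description: str
    effective: date


def stale_regions(data_cutoff: date, changes: list[RegionChange]) -> dict[str, list[str]]:
    """Map each region to the changes that took effect after the data cutoff."""
    stale: dict[str, list[str]] = {}
    for change in changes:
        if change.effective > data_cutoff:
            stale.setdefault(change.region, []).append(change.description)
    return stale


# Hypothetical change log for two deployment regions.
changes = [
    RegionChange("region-a", "new roundabout layout", date(2025, 3, 1)),
    RegionChange("region-b", "speed limit revision", date(2024, 6, 1)),
]
print(stale_regions(date(2024, 12, 31), changes))
# → {'region-a': ['new roundabout layout']}
```

Any region that appears in the result is one where the model has not seen the current rules or road layout, which is the kind of finding the impact assessment should record before a deployment decision is made.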

Impact Mapping of Benefits and Harms to Persons or Groups

Section 6.8.2.1 states:

“For each relevant interested party, the reasonably foreseeable benefits and harms should be analysed. For example, the organization conducting the impact assessment should consider the reasonably foreseeable benefits each relevant interested party can expect as a result of interacting with the AI system.”

In this case, we’d primarily be assessing the impact on people riding in vehicles driven by AI models, along with the organizations and regulators accountable for those vehicles. Foreseeable harms can include:

- Overreliance on autonomous features leading to complacency.

- Confusion or mistrust if the system makes unpredictable decisions.

- Limited understanding of what to do in the event of a system error.

- Reputational risk if the AI system fails in publicized incidents.

- Legal liability in ambiguous or shared-responsibility scenarios.

- Difficulty in tracking, verifying, and enforcing compliance in real-time AI decisions.
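An impact mapping like the one above can be kept as a simple structure that groups benefits and harms under each interested party, as Section 6.8.2.1 suggests. The following is a rough sketch under my own assumptions: the `Impact` record, the helper names, and the assignment of each harm to a "rider" or "manufacturer" party are illustrative and do not come from the standard.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Impact:
    party: str        # interested party affected (hypothetical labels)
    kind: str         # "benefit" or "harm"
    description: str


def map_impacts(impacts: list[Impact]) -> dict[str, dict[str, list[str]]]:
    """Group benefits and harms under each interested party."""
    mapping = defaultdict(lambda: {"benefit": [], "harm": []})
    for impact in impacts:
        if impact.kind not in ("benefit", "harm"):
            raise ValueError(f"unknown impact kind: {impact.kind}")
        mapping[impact.party][impact.kind].append(impact.description)
    return dict(mapping)


def parties_missing_analysis(mapping: dict[str, dict[str, list[str]]]) -> list[str]:
    """Parties for which only one side (benefits or harms) has been analysed."""
    return sorted(
        party for party, sides in mapping.items()
        if not sides["benefit"] or not sides["harm"]
    )


impacts = [
    Impact("rider", "harm", "Overreliance on autonomous features leading to complacency"),
    Impact("manufacturer", "harm", "Legal liability in shared-responsibility scenarios"),
]
mapping = map_impacts(impacts)
print(parties_missing_analysis(mapping))
# → ['manufacturer', 'rider']
```

Both parties are flagged here because only harms have been recorded so far. The standard asks for benefits and harms to be analysed for each party, so the mapping is not complete until the benefit side is filled in as well.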

With this information, we have covered ISO42001’s suggestion for documentation of AI resources as well as what to address for an impact assessment unique to AI systems. In the second part of this article I will address the final key component, AI System Lifecycles.
